The CPUs Powering Today’s Parallel-Processing Boom

Transforming Data Centers Into Supercomputers


For the past three weeks in The Big Secret on Wall Street, we have detailed, like no one else has, the technological transformation that is driving artificial intelligence, machine learning, and so much more. We call this big story The Parallel-Processing Revolution.

Next week, we’ll publish an Independence Day special report. Then, on July 12, we’ll dig deeper into the exponential transformations taking place in computing right now. And on July 19, we’ll conclude the series with a look at the energy and data-storage capacity needed to propel this revolution over the next few decades.

In honor of Porter & Co.’s second anniversary, we’re making this vital six-part series free to all our readers. You’ll get a high-level view of this tech revolution and receive several valuable investment ideas along the way. However, detailed recommendations and portfolio updates will be reserved for our paid subscribers. If you are not already a subscriber to The Big Secret on Wall Street, click here…

Here are links to Part 1, which provides a series overview and tells how chipmaker Nvidia led the industry switch to parallel computing; Part 2, which chronicles two near-monopoly companies that underpin global chip production; and Part 3, which compares the current investment opportunities with those of the internet boom 25 years ago.

We share Part 4 of this series with you below.

No one insulted Sir Alan Sugar’s pet robot and got away with it.

Especially not cheeky technology journalist Charles Arthur… who’d dared to suggest in a 2001 article in British online newspaper The Independent that Lord Sugar’s latest invention was a “techno-flop”… difficult to operate and even harder to sell.

Sugar’s brainchild, the E-M@iler – a clunky landline phone with attached computer screen that allowed you to check your email – wasn’t flying off the shelves of British electronics retailers. With smartphones still a decade or so away, the idea of browsing emails on your telephone just seemed bizarre.

And the E-M@iler – despite its appealing, friendly-robot presence – had plenty of drawbacks: users were charged per minute… per email… and were bombarded by non-stop ads on the tiny screen. Customers’ phones were auto-billed each night when the E-M@iler downloaded the day’s mail from the server. (By the time you’d owned the phone for a year, you’d paid for it a second time in hidden fees and charges.)

But scrappy Sir Alan, who’d grown up in London’s gritty East End and now owned England’s top computer manufacturer, Amstrad, wasn’t about to admit that he’d pumped 6 million pounds, and counting, into a misfire.

Sugar wasn’t afraid to fight dirty. And he had a weapon that Charles Arthur, the technology critic, didn’t: 95,000 email addresses, each one attached to an active E-M@iler.

On an April morning in 2001, Lord Sugar pinged his 95,000 robots with a mass email. It was a call to arms.

“I’m sure you are all as happy with your e-mailer as I am,” he wrote. “The other day… the technology editor of the Independent said that our e-mailer was a techno-flop… It occurred to me that I should send an email to Mr Charles Arthur telling him what a load of twaddle he is talking. If you feel the same as me and really love your e-mailer, why don’t you let him know your feelings by sending him an email.”

In a move that would likely get him in legal trouble today, Sugar included the offending critic’s email address… then sat back and waited.

A week or so later, the unsuspecting Charles Arthur returned from vacation to find his inbox bursting with 1,390 messages.

But – in a surprise turn of events for Lord Sugar – most of the missives were far from glowing endorsements of the E-M@iler…

Instead, scores of ticked-off robot owners seized the chance to tell the press just how unhappy they were with their purchase. “This is by far the worst product I have bought ever.” “I won’t be getting another one, I think they’re crap.” “This emailer I am testing is the second one and I now know that it performs no better than the first one.” “I WANTED TO SAY THAT I’M NOT HAPPY WITH MINE!!!” And so on.

The whole story – including the flood of bad reviews – made the news and made Lord Sugar, probably rightly, out to be an ass.

And the bad news kept piling up for him. Over the next five years, the E-M@iler continued to disappoint. In 2006, the price was cut from £80 to £19, with Amstrad making a loss on every unit. After a string of additional financial and operational misfires, Lord Sugar sold Amstrad to British Sky Broadcasting for a fire-sale price of £125 million… a far cry from its peak £1.3 billion valuation in the ’80s.

Fortunately for Sir Alan Sugar, he found a fulfilling second career post-Amstrad. Today, he serves as Britain’s answer to Donald Trump, yelling “You’re fired!” at contestants on the long-running English version of the TV show The Apprentice.

In a way, though, the E-M@iler fired Lord Sugar first.

It was an ignominious finish for a company that, in the 1980s, had commanded 60% of the home-computer market in England.

And in the end, Sugar’s most lasting contribution to computer technology wasn’t even something he created directly. Today, we have the supercomputer chip that’s the “brains” of the Parallel Processing Revolution… largely because Lord Sugar got in a fight.

A Call to ARM1

From his childhood days hawking soda bottles on the streets of London, Alan Sugar was a hustler. He launched his technology company, Amstrad (short for Alan Michael Sugar Trading), in 1968 selling TV antennas out of the back of a van, then graduated to car stereos in the ’70s and personal computers in the ’80s.

With the 1980s personal-computer boom – as technology advanced and formerly massive mainframes shrank to desktop size – came fierce competition…

On the American side of the pond, Apple and Microsoft jockeyed for the pole position (a dance that still continues today). The English home-computer arms race wasn’t as well known outside of trade publications… but in the early ’80s, British nerds would have drawn swords over the burning question of “Acorn or Sinclair.”

Acorn (known as the “Apple of Britain”) was the more sophisticated of the two, with a long-standing contract to make educational computers for the BBC (British Broadcasting Corporation), while Sinclair traded on mass appeal, producing Britain’s best-selling personal computer in 1982. (Their feud was dramatized in Micro Men, a 2009 BBC drama.)

Into this fray waded – you guessed it – Sir Alan Sugar, who knew nothing about programming or software but was determined to propel his protean tech company, Amstrad, to the top of the industry. He did this in the simplest way possible: in 1986, he bought the more popular combatant, Sinclair, wholesale.

Just as he’d hoped, the existing Sinclair product line – plus a successful monitor-keyboard-printer combo designed by Sugar himself – propelled Sugar’s company to a £1.3 billion valuation by the late ’80s.

Tiny £135 million Acorn, left out in the cold, couldn’t compete. Or could it?

Acorn fought valiantly before selling out to an Italian firm and ultimately bowing out of the computer biz in the early ’90s. And out of that effort grew the super-speedy chip that, today, powers the Parallel Processing Revolution.

Acorn’s proprietary chip, called ARM1, was based on a “reduced instruction set computer” architecture, or RISC. It was 10 times faster than the “complex instruction set computer” (CISC) CPU chips in Lord Sugar’s computers – and it could be manufactured more cheaply, too. The ARM1-powered computer retailed at one-third the price point of a mainstream PC.

But speedy or not, Acorn’s specialized computers couldn’t best Lord Sugar’s mass-produced PCs. Unlike Sugar’s IBM-compatible computers, Acorn’s machines couldn’t run the popular Microsoft Windows operating system.

Without Windows – and without Lord Sugar’s flair for headline-grabbing chaos – the ARM1-powered computer launched with little fanfare, selling just a few hundred thousand units over the next several years.

But long after Lord Sugar boxed up his E-M@ilers and joined the set of The Apprentice… and long after Acorn cashed in its chips and closed its doors… the ARM1 chip has survived and thrived, thanks to a spinoff partnership with Apple.

And ultimately, that tiny piece of silicon developed into the “brain” that drives Nvidia’s supercomputing architecture… and powers the Parallel Processing Revolution today.

Remarkably – like the GPU (graphics processing unit) monopoly we explored in our June 7 issue – the intellectual property for this chip technology is controlled today by just one company: ARM Holdings (Nasdaq: ARM). While it doesn’t get much attention in the media, ARM is poised to become one of the biggest winners from today’s parallel-computing revolution.

The Company That Ties It All Together

In our June 7 issue, which kicked off our Parallel Processing Revolution series, we explained how Nvidia is far more than just an artificial intelligence (“AI”) chipmaker. Over the last two decades, the company has laid the foundation for a technological revolution – one that’s now changing the very concept of what a computer is.

The combination of Nvidia’s super-powered GPUs, which greatly increase computing speed, and its CUDA (Compute Unified Device Architecture) software platform unlocked the parallel-processing capacity required to train the large language models (“LLMs”) powering today’s AI revolution. But that was just the start. The massive computational workloads of training LLMs – which learn from vast amounts of text in order to generate human-like responses – presented a new challenge beyond computing speed. Training LLMs to recognize patterns across huge swaths of data created an explosion in memory demand.

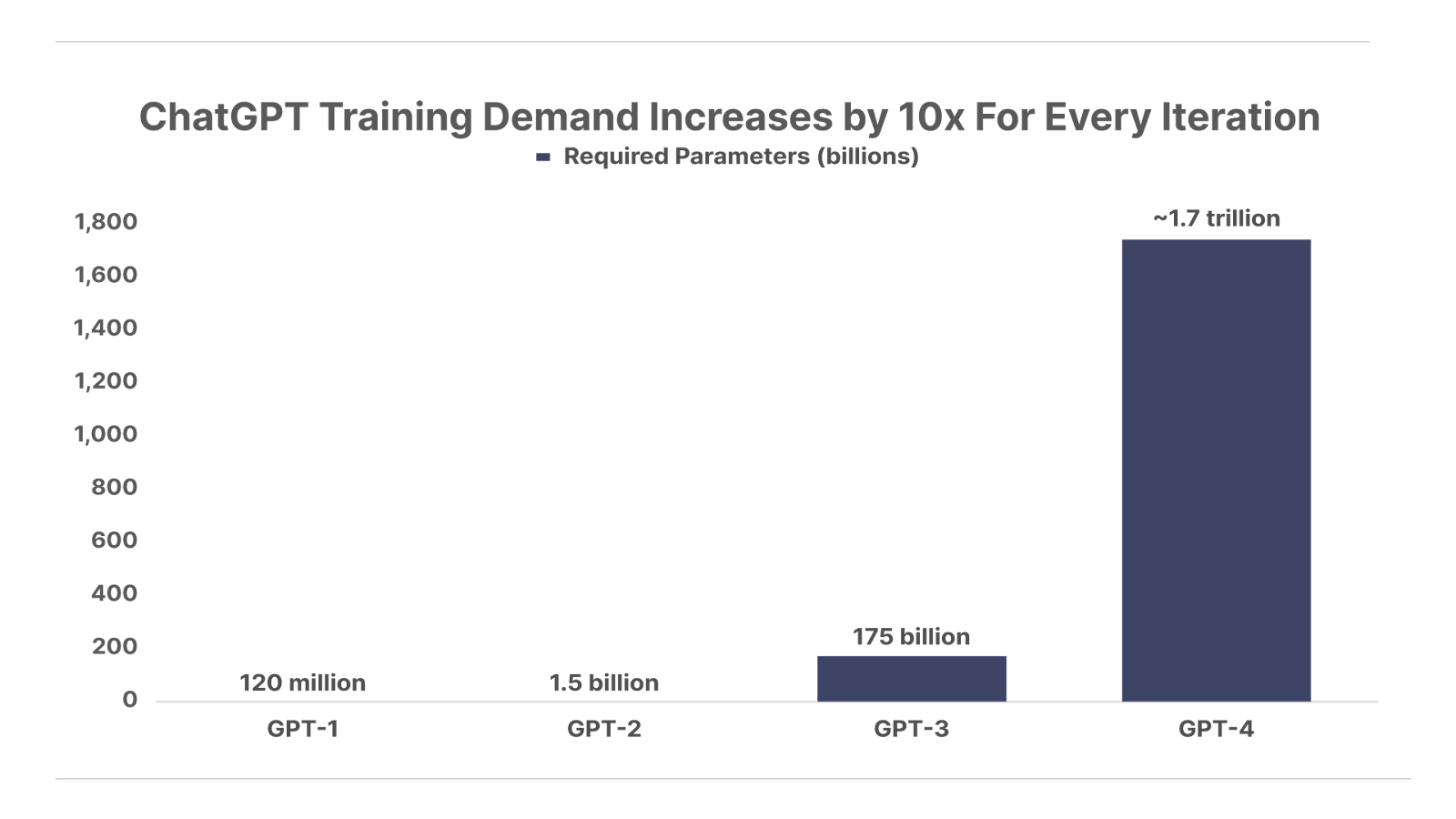

Consider the number of parameters – the internal numerical weights a model adjusts as it learns – in today’s cutting-edge LLMs like those behind ChatGPT. The first model in the series, GPT-1, released in 2018, had about 120 million parameters. Each new generation grew dramatically: GPT-2 had 1.5 billion parameters in 2019, followed by 175 billion for GPT-3 in 2020. OpenAI hasn’t disclosed the parameter count of its latest model, GPT-4, but experts estimate it at approximately 1.7 trillion – roughly 10 times GPT-3, and more than 10,000 times GPT-1, in about five years.
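To put those growth figures in perspective, here is a short Python sketch that computes the jump from one model generation to the next. The parameter counts are the ones quoted above; the GPT-4 number is an outside estimate, not a figure OpenAI has confirmed, so treat its ratios as approximate.

```python
# Parameter counts as quoted in the article. The GPT-4 figure is an outside
# estimate; OpenAI has not disclosed it.
PARAMS: dict[str, float] = {
    "GPT-1 (2018)": 120e6,
    "GPT-2 (2019)": 1.5e9,
    "GPT-3 (2020)": 175e9,
    "GPT-4 (est.)": 1.7e12,
}


def growth_multiples(params: dict[str, float]) -> list[tuple[str, float]]:
    """Return (model, size relative to the previous model) for every model after the first."""
    names = list(params)  # dicts preserve insertion order (Python 3.7+)
    return [
        (names[i], params[names[i]] / params[names[i - 1]])
        for i in range(1, len(names))
    ]


if __name__ == "__main__":
    for name, multiple in growth_multiples(PARAMS):
        print(f"{name}: {multiple:,.1f}x the previous model")
    overall = PARAMS["GPT-4 (est.)"] / PARAMS["GPT-1 (2018)"]
    print(f"GPT-1 to GPT-4 (est.): {overall:,.0f}x")
```

Run as written, it reports jumps of roughly 12.5x, 117x and 10x between successive generations, and about a 14,000-fold increase from GPT-1 to the GPT-4 estimate.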

LLMs must hold mountains of data in memory while their parameters are being trained. In computer science, memory capacity is measured in bits and bytes. A bit is the smallest unit of data: a single 1 or 0 in binary code. A byte is a group of 8 bits, enough to represent a single character in memory, such as a letter or number. One trillion bytes make up 1 terabyte – and hundreds of terabytes are required to train today’s most advanced LLMs.

The challenge: even cutting-edge GPUs like Nvidia’s H100 hold only a fraction of a percent of the memory needed to train today’s largest LLMs. Each individual H100 chip contains about 80 gigabytes of memory (one gigabyte equals roughly 1 billion bytes).
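Why can’t a single 80-gigabyte chip do the job? A back-of-the-envelope Python sketch helps, but it rests on an assumption that is not from this article: a widely used rule of thumb for mixed-precision training with the Adam optimizer, in which each parameter needs about 16 bytes for its weights, gradients and optimizer state. Activations, training data and checkpoints are ignored, so real requirements run higher. The 1.7-trillion figure is the outside GPT-4 estimate, not a disclosed number.

```python
# Rule-of-thumb assumption (not from the article): mixed-precision training
# with the Adam optimizer keeps about 16 bytes of state per parameter:
#   2 (16-bit weights) + 2 (16-bit gradients)
#   + 4 (32-bit master weights) + 4 (Adam momentum) + 4 (Adam variance)
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4

BITS_PER_BYTE = 8
GIGABYTE = 10**9   # decimal gigabyte: one billion bytes
TERABYTE = 10**12  # decimal terabyte: one trillion bytes
H100_MEMORY_BYTES = 80 * GIGABYTE  # per-chip figure quoted in the article


def training_state_bytes(num_params: int) -> int:
    """Bytes for weights, gradients and optimizer state only (activations ignored)."""
    return num_params * BYTES_PER_PARAM


def min_gpus_for_state(num_params: int, gpu_bytes: int = H100_MEMORY_BYTES) -> int:
    """Smallest number of GPUs whose combined memory could hold that state."""
    return -(-training_state_bytes(num_params) // gpu_bytes)  # ceiling division


if __name__ == "__main__":
    print(f"One H100 holds {H100_MEMORY_BYTES * BITS_PER_BYTE:,} bits")
    for name, params in [("GPT-3", 175_000_000_000),
                         ("GPT-4 (est.)", 1_700_000_000_000)]:
        tb = training_state_bytes(params) / TERABYTE
        print(f"{name}: ~{tb:.1f} TB of training state, "
              f"at least {min_gpus_for_state(params)} H100s")
```

Even this lower bound, about 2.8 terabytes (35 chips) for GPT-3 and about 27 terabytes (340 chips) for a 1.7-trillion-parameter model, shows why memory has to be pooled across many chips.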

In the data-center architectures of just five years ago, systems engineers who needed more memory would link multiple chips together via Ethernet cables. This hack was sufficient to handle most data-center workloads before the age of AI.

But things have changed dramatically in those five years. Now, hundreds of terabytes of memory storage and transmission capacity are needed to power data centers. Almost no one in the industry anticipated that the change would happen so quickly – but Jensen Huang did. As far back as 2019, the Nvidia CEO foresaw the need to connect not just a few chips, but hundreds of chips in a data center. Huang reimagined the data center, turning it from a series of compartmentalized chips working independently on different tasks into a fully integrated supercomputer, where each chip could contribute its memory and processing power toward a single goal – training and running LLMs.

In 2019, only one company in the world had the high-performance cables capable of connecting hundreds of high-powered data-center chips together: Mellanox, the sole producer of the InfiniBand cables (mentioned in “The Big Bang That No One Noticed” on June 7). That year, Nvidia announced its $6.9 billion acquisition of the networking-products company. During a conference call with journalists discussing the acquisition, CEO Huang laid out his vision for this new computing architecture:

Hyperscale data centers were really created to provision services and lightweight computing to billions of people. But over the past several years, the emergence of artificial intelligence and machine learning and data analytics has put so much load on the data centers, and the reason is that the data size and the compute size is so great that it doesn’t fit on one computer… All of those conversations lead to the same place, and that is a future where the datacenter is a giant compute engine… In the long term, I think we have the ability to create data-center-scale computing architectures.

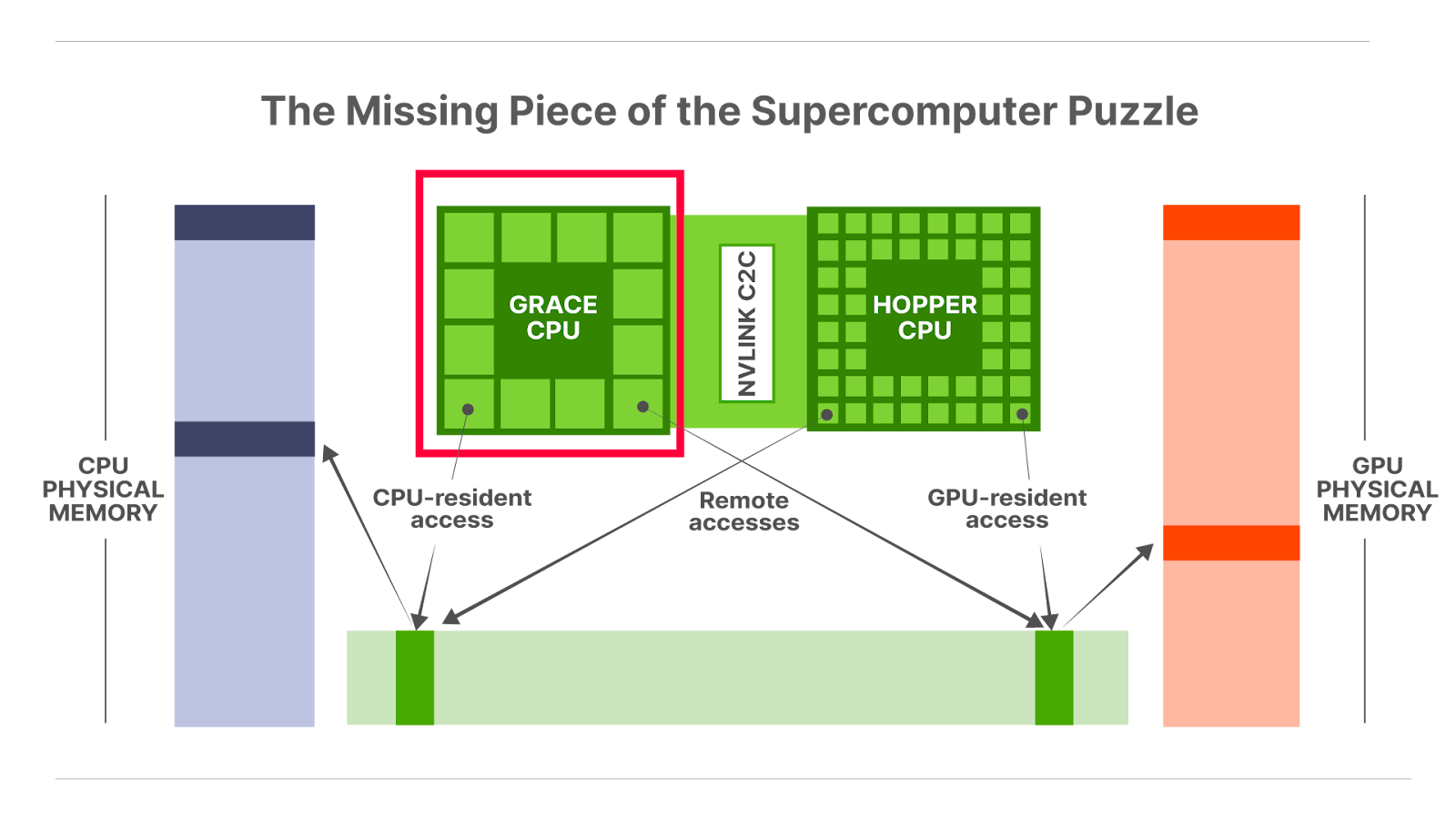

David Rosenthal, host of the tech podcast Acquired, called Nvidia’s purchase of Mellanox “one of the best acquisitions of all time.” It provided the missing link the chipmaker needed to harness the power of hundreds of data-center chips together into a massive and explosively fast architecture. Less than four years after the acquisition, Huang’s supercomputing vision became a reality, in the form of the Grace Hopper Superchip architecture, which combines the Hopper GPU (based on Nvidia’s H100 chip) and the Grace CPU (more on this below).

The big breakthrough in combining these was Nvidia’s ability to package Mellanox InfiniBand technology into its proprietary NVLink data-transmission cables. The NVLink system in the Grace Hopper architecture transmits memory data nine times faster than traditional Ethernet cables. This enabled Nvidia to connect up to 256 individual Grace Hopper chips and tap into the full memory bank of both the Hopper GPU and the Grace CPU.

It was a major step in the Parallel Processing Revolution.

On to the Next Step in the Revolution

The end result: Nvidia transformed its already-powerful data-center GPUs into a supercomputer with a total addressable memory bank of 150 terabytes. That number is frankly too large to comprehend: 150 terabytes is 150 trillion bytes of memory, nearly 2,000 times the memory capacity of a single H100 GPU, and roughly 10,000 times the memory (RAM) of a typical laptop with 16 gigabytes.
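The 150-terabyte figure can be roughly reproduced in a few lines of Python. The per-superchip memory sizes are an assumption drawn from Nvidia’s launch specifications for the original Grace Hopper Superchip as we understand them (96 gigabytes on the Hopper GPU plus 480 gigabytes on the Grace CPU); they do not appear in this article, and later versions ship with different configurations. The 16-gigabyte laptop is likewise a hypothetical benchmark.

```python
# Assumed per-superchip memory, based on Nvidia's launch specifications for the
# original GH200 as we understand them (not figures from the article).
GPU_HBM_GB = 96       # Hopper GPU high-bandwidth memory (HBM3)
CPU_LPDDR_GB = 480    # Grace CPU memory (LPDDR5X)
NUM_SUPERCHIPS = 256  # "up to 256" superchips, per the article

H100_GB = 80                # single H100, as quoted in the article
LAPTOP_RAM_GB = 16          # hypothetical "typical" laptop
ARTICLE_TOTAL_GB = 150_000  # the article's rounded 150 terabytes


def pooled_memory_gb(n: int = NUM_SUPERCHIPS) -> int:
    """Total memory when n superchips share one addressable memory space."""
    return n * (GPU_HBM_GB + CPU_LPDDR_GB)


if __name__ == "__main__":
    total = pooled_memory_gb()
    print(f"{total:,} GB")                                    # 147,456 GB
    print(f"{total / 1000:.1f} TB if 1 TB = 1,000 GB")        # 147.5
    print(f"{total / 1024:.1f} TB if 1 TB = 1,024 GB")        # 144.0
    print(f"vs. one H100: {ARTICLE_TOTAL_GB / H100_GB:,.0f}x")              # 1,875x
    print(f"vs. a 16 GB laptop: {ARTICLE_TOTAL_GB / LAPTOP_RAM_GB:,.0f}x")  # 9,375x
```

Whether the pooled total reads as 144 or about 147 terabytes depends only on which definition of a terabyte is used; either way it rounds to the article’s 150. The ratios bear out the “nearly 2,000 times” comparison with a single H100.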

Therein lies the big breakthrough that has allowed Nvidia to upend the concept of modern-day computing. And no other company has come close to putting together this full ecosystem for transforming the data center into a supercomputer.

Today, Nvidia owns virtually all of the hardware, software, and networking technology necessary to transmit terabytes of data at lightning-quick speeds throughout the data center. Chamath Palihapitiya, one of Silicon Valley’s highest-profile venture capitalists, described the significance of the Grace Hopper architecture on the All-In podcast after Nvidia unveiled it:

This is a really important moment where that Grace Hopper design, which is basically a massive system on chip, this is like them [Nvidia] going for the jugular… that is basically them trying to create an absolute monopoly. If these guys continue to innovate at this scale, you’re not going to have any alternatives. And it goes back to what Intel looked like back in the day, which was an absolutely straight-up monopoly. So if Nvidia continues to drive this quickly and continues to execute like this, it’s a one and done one-company monopoly in AI.

However, there’s one piece of the puzzle that Nvidia tried, and failed, to corner: the Grace CPU, boxed in red in the diagram below. It is the only technology in this system for which Nvidia relies on another company.

Even in today’s new era of parallel computing, there are still some tasks that require running computations in a serial sequence. As a highly simplified analogy, the serial-processing CPU acts like the brains that guide the brawn of the parallel-processing GPU. And just like the human brain, the CPUs in modern-day data centers require huge amounts of energy.
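A toy Python sketch makes the serial/parallel distinction concrete. Squaring a list of numbers is independent work: each element can go to a different worker. The Collatz sequence (halve an even number, or triple an odd one and add 1) is dependent work: each step needs the previous result. The thread pool only illustrates how independent work can be split up; it is not meant to show a speedup, and real GPU code is written very differently (for example, in CUDA).

```python
from concurrent.futures import ThreadPoolExecutor


def square(v: int) -> int:
    return v * v


def square_all_parallel(values: list[int], workers: int = 4) -> list[int]:
    """Independent work: any element can be handled by any worker, in any order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map runs tasks concurrently but returns results in input order.
        return list(pool.map(square, values))


def collatz_path(n: int) -> list[int]:
    """Dependent work: every step needs the value produced by the step before it."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    path = [n]
    while n != 1:
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        path.append(n)
    return path


if __name__ == "__main__":
    print(square_all_parallel([1, 2, 3, 4, 5]))  # [1, 4, 9, 16, 25]
    print(collatz_path(6))                       # [6, 3, 10, 5, 16, 8, 4, 2, 1]
```

A GPU excels at the first kind of job, thousands of independent operations at once. The second kind, where the next step cannot start until the last one ends, is where a fast serial CPU still earns its place.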

ARM Holdings is the only company in the world that owns the intellectual property underlying the most energy-efficient CPU designs in the market, including the Grace CPU. And while it gets much less attention in the financial press relative to Nvidia’s GPU dominance, ARM holds the keys to the critical CPU designs fueling the current AI arms race. In this issue, we’ll show why ARM is poised to become one of the biggest winners from today’s parallel computing revolution.

A Global Juggernaut Born in a Barn

ARM’s CPU technology and business model have come a long way since the company’s formation as a spinoff from Acorn Computers in 1990. Today, the company no longer produces chips. Instead, it licenses its intellectual property to other semiconductor companies that design chips based on ARM’s technology. In the last 25 years, over 285 billion semiconductors have been manufactured using ARM-based designs. This includes 28.6 billion chips last year alone.

The technology that ARM licenses is known as the “instruction set architecture” (ISA) for semiconductors. This refers to the set of instructions working behind the scenes of every programmable electronic device that determines how the chip hardware interfaces with the device’s software. Think of the ISA as the programming language of the computer chip, which contains a set of commands that a microprocessor will recognize and execute. This is how electronic devices transform the binary language of 1s and 0s into functional outcomes on a given device.
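To make the idea of an instruction set concrete, here is a minimal Python sketch of a made-up four-instruction ISA and a tiny interpreter that plays the role of the chip: it fetches each instruction, decodes its opcode, and executes it against a few registers. The opcodes (`MOV`, `ADD`, `SUBI`, `BNZ`) and register names are hypothetical and far simpler than ARM’s real instruction set, which a chip decodes from binary encodings rather than text.

```python
# A hypothetical four-instruction ISA, invented for illustration (not ARM's).
Instruction = tuple[str, ...]

# Multiply 6 x 7 by repeated addition.
MULTIPLY_6_BY_7: list[Instruction] = [
    ("MOV", "r0", "0"),         # 0: r0 = 0  (running total)
    ("MOV", "r1", "7"),         # 1: r1 = 7  (value to add)
    ("MOV", "r2", "6"),         # 2: r2 = 6  (loop counter)
    ("ADD", "r0", "r0", "r1"),  # 3: r0 = r0 + r1
    ("SUBI", "r2", "1"),        # 4: r2 = r2 - 1
    ("BNZ", "r2", "3"),         # 5: if r2 != 0, jump back to instruction 3
]


def run(program: list[Instruction]) -> dict[str, int]:
    """Fetch, decode and execute each instruction until the program ends."""
    regs = {"r0": 0, "r1": 0, "r2": 0}
    pc = 0  # program counter: index of the next instruction to fetch
    while pc < len(program):
        op, *args = program[pc]          # fetch + decode
        if op == "MOV":                  # MOV rd, imm
            regs[args[0]] = int(args[1])
        elif op == "ADD":                # ADD rd, ra, rb
            regs[args[0]] = regs[args[1]] + regs[args[2]]
        elif op == "SUBI":               # SUBI rd, imm
            regs[args[0]] -= int(args[1])
        elif op == "BNZ":                # BNZ rs, target
            if regs[args[0]] != 0:
                pc = int(args[1])
                continue
        else:
            raise ValueError(f"unknown opcode {op!r}")
        pc += 1
    return regs


if __name__ == "__main__":
    print(run(MULTIPLY_6_BY_7)["r0"])  # 42
```

Software never touches the transistors directly; it only emits instructions the chip promises to understand. That contract is what ARM licenses, which is why so many different companies can build compatible chips around it.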

ARM’s exclusive focus on designing chip architectures gives it a distinct place in the industry, as one industry analyst describes it:

Most people think about a device. Then maybe if they’re really sophisticated, they think about the chip, but they don’t think about the company that came up with the original ideas behind how that chip operates. But once you understand what they [ARM] do, it’s absolutely amazing the influence they have.

That influence includes a 99% market share in global smartphone CPU architectures, along with a major presence in tablets, wearable devices, smart appliances, and automobiles. ARM’s next big opportunity will come from its aggressive expansion beyond smartphones into the new data-center CPU architectures powering today’s parallel computing revolution.

A key advantage for ARM in today’s data-center CPUs is driven by the same feature that allowed its chip architecture to take over the global smartphone market. To understand the source of this advantage, let’s go back to ARM’s founding when it first broke into the emerging market of handheld devices in the 1990s.

ARM Finds Its Killer App in the Mobile Revolution

In 1990, Apple Computer was three years into developing one of the world’s first personal digital assistants (PDA), the Newton. The company originally hired AT&T to develop the Newton’s CPU, but after a series of setbacks and cost overruns, it ditched AT&T and began searching for a new partner.

Unlike PCs, which plugged into wall outlets, the main constraint of mobile devices was battery life. One shortcoming of AT&T’s CPU was its high energy consumption, which limited the battery life. The chip was based on the industry-standard architecture at the time, known as a “complex instruction set computer” (CISC).

Apple homed in on Acorn’s emerging, energy-efficient RISC architecture as the perfect fit for the CPU in its new mobile device.

The key difference between Acorn’s RISC architecture and CISC chip designs boils down to how the two approaches use the CPU’s clock cycle. A clock cycle is a single tick of the CPU’s internal clock, the timing signal that synchronizes operations across the chip’s components.

In a CISC architecture, a single instruction can perform several different tasks (e.g., transferring data, performing arithmetic, or accessing memory). These instructions vary in length and complexity, and each can require multiple clock cycles to execute.

Conversely, the RISC architecture limits each instruction to a simpler, fixed-length format designed to execute in a single clock cycle. A RISC program may need more of these simple instructions to do the same job, but each one finishes faster. That enabled RISC-based chips to run more computations, faster, even on less powerful hardware than CISC-based designs.
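Computer architects often summarize this trade-off with a standard textbook formula: execution time equals instruction count times average cycles per instruction (CPI), divided by the clock rate. The Python sketch below plugs in hypothetical numbers, not measurements of any real chip: the RISC version needs 60% more instructions for the same task, but each takes one cycle instead of four.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    instruction_count: int  # instructions executed to finish the task
    avg_cpi: float          # average clock cycles per instruction


def total_cycles(w: Workload) -> float:
    return w.instruction_count * w.avg_cpi


def execution_time_us(w: Workload, clock_mhz: float) -> float:
    """CPU time = instructions x cycles per instruction / clock rate.

    With the clock rate in MHz (millions of cycles per second), the result
    is in microseconds.
    """
    return total_cycles(w) / clock_mhz


# Hypothetical numbers for the same task on two chips clocked at 8 MHz.
CLOCK_MHZ = 8.0
cisc = Workload("CISC", instruction_count=1_000, avg_cpi=4.0)  # fewer, heavier instructions
risc = Workload("RISC", instruction_count=1_600, avg_cpi=1.0)  # more, single-cycle instructions

if __name__ == "__main__":
    for w in (cisc, risc):
        print(f"{w.name}: {total_cycles(w):,.0f} cycles, "
              f"{execution_time_us(w, CLOCK_MHZ):.0f} microseconds")
```

Under these made-up inputs the RISC chip finishes in 200 microseconds versus 500 for the CISC chip, despite executing more instructions, because each instruction is so much cheaper.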

Leaving the PC market behind, Acorn spun off its division dedicated to ARM CPU technology into a joint venture (JV) with Apple to develop the Newton CPU. Apple contributed $2.7 million to acquire 46% of the JV, and Acorn contributed its technology and 12 employees for another 46%. A third company called VLSI Technology – which previously made Acorn’s ARM-based CPU chips – became the manufacturing partner in exchange for the remaining 8% equity stake.

The new entity, formed in 1990, was called Advanced RISC Machines, which later became ARM Holdings.

The ARM design team spent the next three years working closely with Apple engineers to develop a custom CPU chip for the Newton PDA, launched in 1993. Although the chip met all of Apple’s requirements for processing speed and efficiency, the product itself was a flop. Critics said Apple over-engineered the Newton, building in functionality consumers didn’t want and ending up with a hefty price tag of $700 (about $1,500 in today’s dollars).

ARM’s CEO at the time, Robin Saxby, learned from the endeavor that the company couldn’t stake its future on a single product line. So he devised what would become an incredibly successful, highly capital-efficient business model that remains in place today: the licensing and royalty model.

Instead of developing chip architectures around individual product lines, ARM would develop platforms of chip architectures that could be fine-tuned for specific end markets. In this way, ARM could license its chip architectures to many different companies, diversifying its risk and tapping into a wider revenue base. It would charge customers an upfront license fee for using its chip architectures, plus a small royalty (typically 1% to 2% of the total CPU cost) on each chip sold.
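The economics of a license-plus-royalty model can be sketched in a few lines of Python. Every input below (fees, chip prices, royalty rates and unit volumes) is hypothetical, chosen only to sit within the 1% to 2% royalty range mentioned above; none comes from ARM’s financials. Amounts are kept in whole cents and rates in basis points (hundredths of a percent) to avoid floating-point rounding.

```python
from dataclasses import dataclass


@dataclass
class Licensee:
    name: str
    upfront_fee_cents: int  # one-time license fee
    chip_price_cents: int   # price of each chip that embeds the design
    royalty_bps: int        # royalty rate in basis points (100 bps = 1%)
    units_sold: int


def royalty_cents(lic: Licensee) -> int:
    """Royalty per chip times units shipped, in whole cents."""
    return lic.chip_price_cents * lic.royalty_bps * lic.units_sold // 10_000


def total_revenue_cents(licensees: list[Licensee]) -> int:
    """Upfront fees plus royalties, summed across every licensee."""
    return sum(lic.upfront_fee_cents + royalty_cents(lic) for lic in licensees)


# Hypothetical licensees in three different end markets.
LICENSEES = [
    Licensee("Phone chipmaker", 500_000_000, 2_000, 150, 100_000_000),
    Licensee("Auto supplier", 200_000_000, 5_000, 100, 10_000_000),
    Licensee("IoT vendor", 50_000_000, 300, 200, 500_000_000),
]

if __name__ == "__main__":
    for lic in LICENSEES:
        print(f"{lic.name}: ${royalty_cents(lic) / 100:,.0f} in royalties")
    print(f"Total, fees included: ${total_revenue_cents(LICENSEES) / 100:,.0f}")
```

With these made-up inputs, the three licensees together yield $72.5 million, most of it from royalties that keep arriving as long as the chips keep shipping. Spreading income across unrelated markets is the point: a flop in any one of them, as with the Newton, no longer sinks the whole business.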

Taking ARM to the Next Level

After pivoting to this new model, ARM struck a landmark deal in 1993 with mobile-phone maker Nokia and its chipmaker Texas Instruments. At the time, Nokia was developing the precursors to today’s smartphones, implementing quasi-intelligent applications like text, email, calculators, and games. These features required CPUs, and just as with the Newton, energy efficiency.

As part of the Nokia deal, ARM developed what became the ARM7 family of microprocessor architectures. This architecture went into the CPU of the Nokia 6110 GSM mobile phone, first launched in 1998. The highly energy-efficient ARM7 RISC-based CPU gave Nokia the best battery life on the market, with a total talk time of 3.3 hours and a standby time of 160 hours. This compared with competing phones that provided around two hours of talk time and 60 hours of standby time.

The memory capacity and processing speed of the ARM7 CPU also enabled Nokia to store more data and include extra features, like games that came preloaded on the phone. One of them, Snake, became a viral sensation, with over 350 million copies produced, and one of the world’s first major mobile games.

A status symbol in its day, the Nokia 6110 was one of the most popular phones of the late 1990s. Nokia and ARM flourished together in the years that followed, with new ARM7 architectures developed alongside new Nokia models. These included the 3210, released in 1999, which became the best-selling mobile phone of the era with an incredible 160 million units sold.

The successful partnership with Nokia not only provided a massive source of licensing and royalty income for ARM, but it led to widespread adoption of the ARM7 platform among other mobile-phone developers. The architecture was ultimately licensed by 165 different companies, and it’s been used in over 10 billion chips.

ARM’s RISC-based architecture became the leading global standard in mobile-phone CPUs by the late 1990s. The company went public in 1998, and rising revenue and profits sent its stock price soaring. That same year, Apple shut down the Newton and began selling its shares in ARM to shore up its financials. Apple sold its original $2.7 million stake for nearly $800 million, saving the future smartphone leader from bankruptcy and providing the breathing room to turn around its business.

Fast forward a decade to 2007, and ARM once again partnered with Apple on a much more promising project: the CPU architecture for the world’s first iPhone. Apple originally approached Intel to develop an x86-based CPU for the first iPhone. However, Intel turned down the deal over pricing, failing to realize the long-term potential of the smartphone market.

As a result, ARM became the go-to supplier for Apple iPhone chips, as well as virtually every other smartphone on the market. Today, ARM’s CPU architectures are found in 99% of global smartphones. Along the way, ARM reinvested its growing stream of profits into other areas suited for its highly energy-efficient designs.

This included the market for digitally connected internet of things (“IoT”) devices, ranging from smart home appliances to a variety of Bluetooth-enabled devices, to wearable consumer electronics like the Apple Watch. ARM-based chips make up 65% of the CPUs in the IoT market today. The company also has a growing presence in CPUs for both traditional and autonomous-enabled vehicles, including the ARM-based chips used in Tesla’s Autopilot and Full Self-Driving systems. ARM has a 41% market share in automotive CPUs.

But looking ahead, the most promising market for ARM’s RISC-based CPUs lies in the new supercomputing data-center architecture.

The Rise of ARM and Fall of Intel in the Data Center

In the 1990s, Intel began adapting its widely popular x86 PC architecture for the data center – huge warehouses filled with computers for storing large amounts of data – taking market share from IBM, the industry leader at the time. Intel’s key advantage came from its economies of scale. It was already mass-producing x86-based chips for the PC market, so it enjoyed a cost advantage thanks to the sheer volume of its x86 manufacturing capacity. Meanwhile, Intel channeled its dominant research-and-development (R&D) budget from its highly profitable PC chip business into developing newer, more capable x86-based chips for the data-center market.

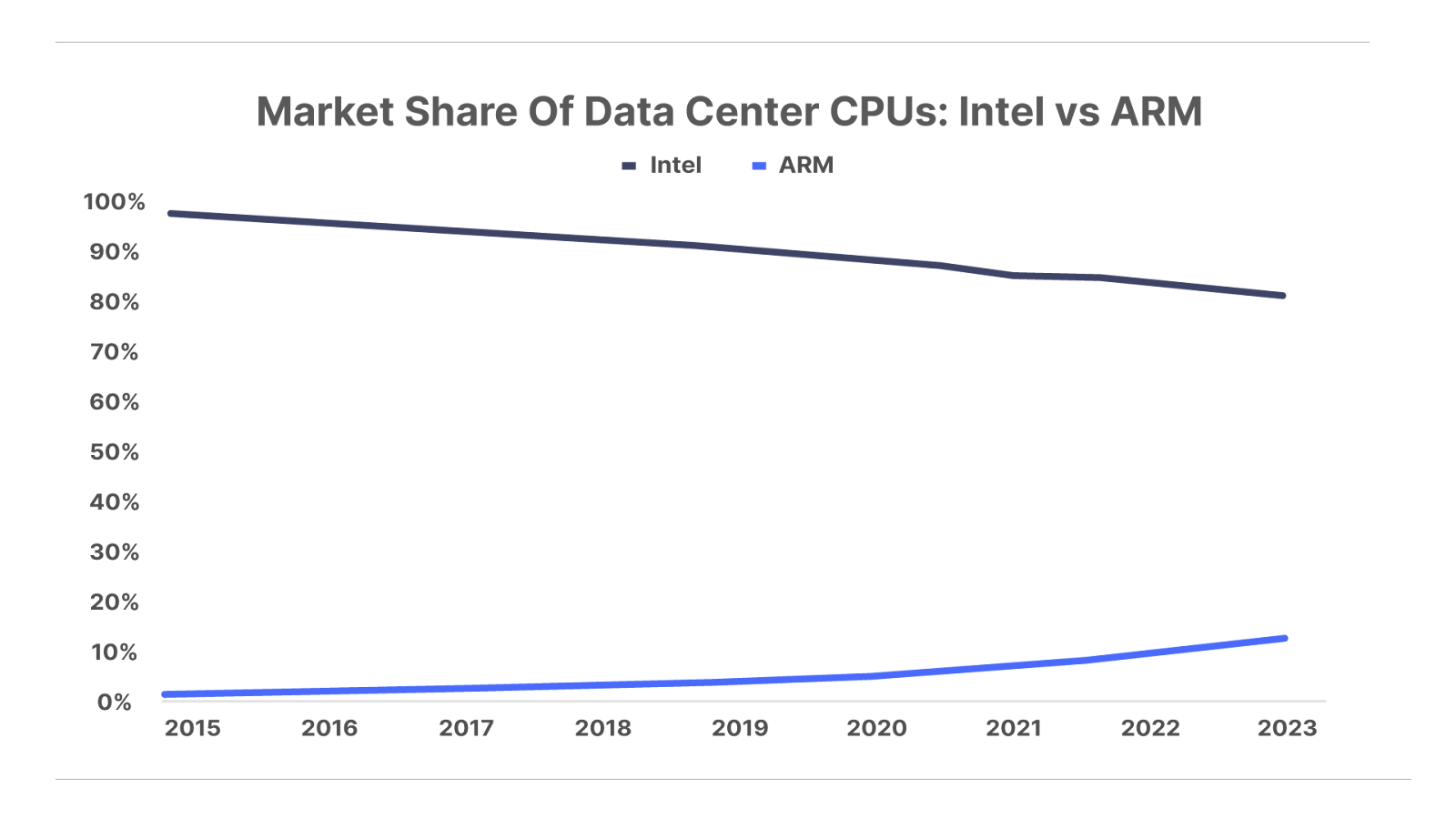

As a result, Intel’s share of the data-center CPU market grew to over 90% by 2017.

ARM’s RISC-based CPUs, meanwhile, held a minuscule, single-digit share of the data-center market. As recently as 2020, the company wasn’t yet focused on this sector, because the single biggest advantage of its RISC architecture – energy efficiency – took a backseat to price, which favored Intel’s greater economies of scale.

But by 2020, Nvidia CEO Jensen Huang realized that energy demands would explode in the new era of data-center supercomputers. And he also knew that ARM’s energy-efficient RISC architecture would become the new gold standard of data-center CPUs in this new paradigm. This was the missing piece of the supercomputing puzzle Nvidia needed in order to control the entire ecosystem of parallel processing.

At the time, Japanese holding company SoftBank owned ARM (it bought the company for $32 billion in 2016). In September 2020, Nvidia made a $40 billion offer to acquire ARM from SoftBank, which agreed to the sale. However, the U.S. Federal Trade Commission sued to block the deal on antitrust grounds, and it was abandoned in 2022. SoftBank maintained full ownership of ARM until September 2023, when it offered 10% of its stake in a public offering.

Short of owning ARM, Nvidia did the next best thing: it worked closely with ARM on developing a CPU optimized for Nvidia’s new data-center supercomputer architecture. The effort came to fruition in the form of the Grace CPU, paired with the Hopper GPU in the Grace Hopper architecture mentioned above.

Nvidia and ARM worked together to optimize the RISC-based instruction set in the Grace CPU for both performance and energy efficiency. And with great success.

Compared with competing x86 alternatives, the new design boosted the performance of Nvidia’s H100 GPUs 30x while reducing energy consumption 25x. The end result: the new design saved enough energy to run an additional 2 million ChatGPT queries versus a comparable x86 CPU chip.

Given that each ChatGPT query requires roughly 10 times as much energy as a conventional Google search, this adds up to major cost savings for data-center operators. The immense energy needs of the AI revolution are the key reason why ARM-based CPUs are set to displace Intel’s x86 architecture in the data center going forward.

And unlike the first time ARM went head-to-head against Intel during the PC revolution of the 1980s, it’s now playing from a position of superior strength. One of the key features of building an enduring competitive moat around computing technologies – whether in software, hardware, or instruction set architectures – is building a robust developer ecosystem around that technology. A developer ecosystem refers to the network of external software and hardware engineers that help advance a technology platform.

When Intel and Microsoft created what was called the Wintel duopoly starting in the 1980s, the dominance of this software-hardware regime led to a robust developer ecosystem that by the late 1990s grew to millions of third-party software and hardware engineers. This ecosystem helped Microsoft develop new software features for advancing its most popular applications, like Excel and Word.

A similar network of developers sprang up to advance the x86 chip architecture. This created a self-reinforcing cycle: the progression of new Windows features and x86 processing capabilities made it the go-to choice for virtually all major PC manufacturers. This, in turn, provided a powerful draw for attracting more developers into the Wintel ecosystem, leading to further technological dominance, and so on.

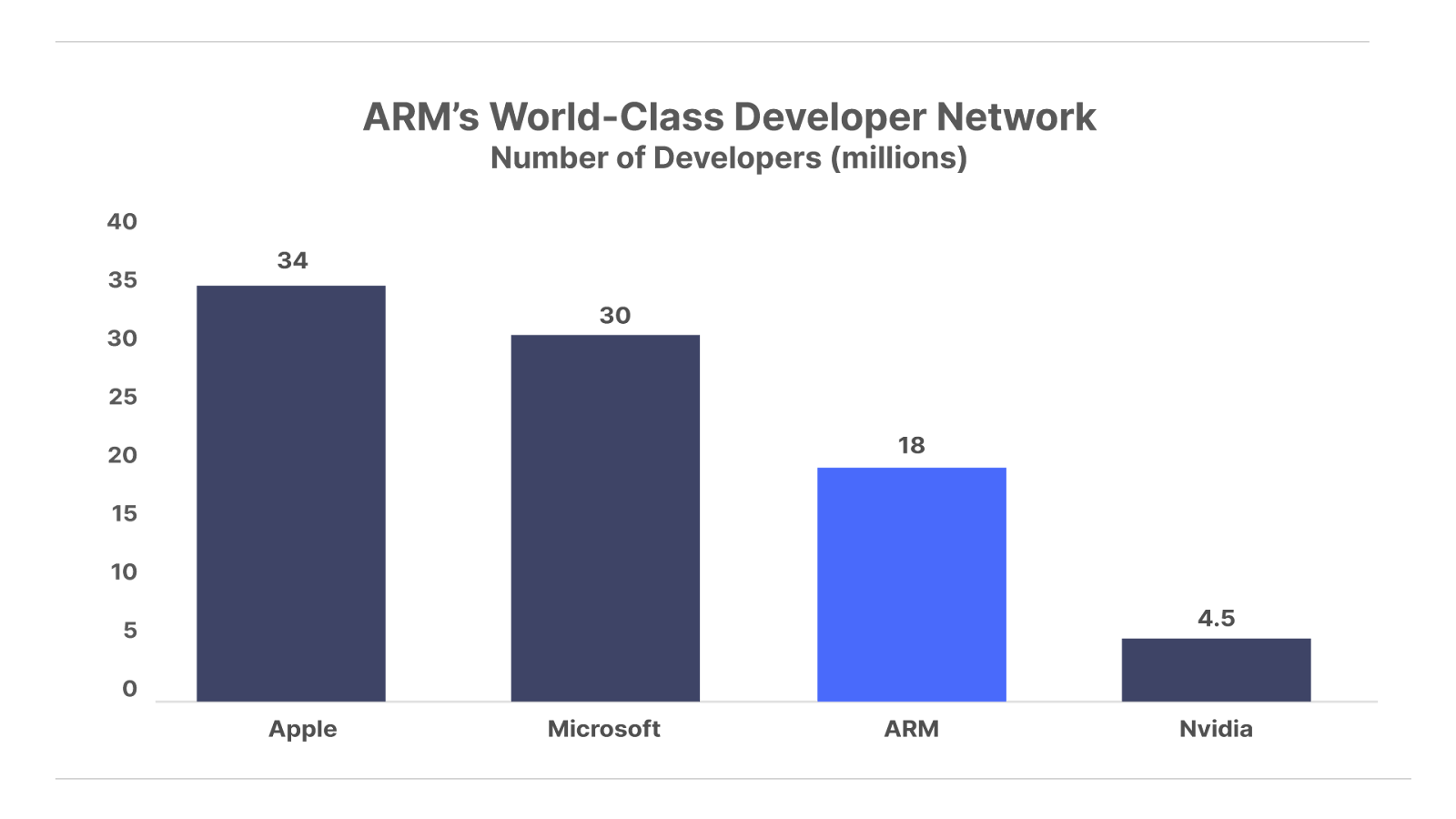

Now, ARM is taking a page from the same playbook by partnering with the new king of the data center – Nvidia. Over the last several years, Nvidia has begun optimizing its CUDA software platform to mesh with ARM’s CPU architecture. This has allowed Nvidia’s 4.5-million-strong developer network to begin designing data-center applications around ARM’s RISC-based CPU architecture.

But that’s just the tip of the iceberg. ARM has also invested heavily in creating its own world-class developer network over the last 35 years. The company now has around 18 million developers who have contributed 1.5 billion hours toward everything from chip designs and operating system integrations to software tools. This puts ARM’s developer network at four times the size of Nvidia’s, and more than half the size of those of tech giants Apple and Microsoft.

With nearly 30 billion ARM-based chips produced each year, ARM is the world’s most widely adopted processor architecture. This expanding ecosystem will continue to widen ARM’s competitive advantage, as the company explains in its financial filings:

The breadth of our ecosystem creates a virtuous cycle that benefits our customers and deeply integrates us into the design cycle because it is difficult to create a commercial product or service for a particular end market until all elements of the hardware and supporting software and tools ecosystem are available.

Beyond this ecosystem, ARM’s other competitive advantage lies in its massive intellectual-property portfolio that includes 7,400 issued patents and 2,500 pending patent applications. This is a result of its heavy emphasis on research, with an industry-high 83% of ARM employees working in research, design, and technical innovation.

This has solidified ARM’s role as the global leader in RISC-based CPU architectures by a wide margin. And its expertise in energy-efficient chip design has made it the go-to choice for the world’s largest data-center operators, including Amazon, Microsoft, and Google – all of whom are racing to create their own data-center chips. Consider a few examples…

Last November, Amazon Web Services (“AWS”) announced it would use ARM’s Neoverse V2 core in its fourth-generation Graviton CPU (Graviton4) for its cloud-computing infrastructure. Neoverse V2 is based on the same ARMv9 architecture used in Nvidia’s Grace CPU.

A report from Amazon based on testimonials from AWS customers shows that Graviton delivers 20% to 70% cost savings versus comparable x86-based chips from Intel and Advanced Micro Devices (“AMD”). Amazon has become a major partner and customer of ARM, and reportedly accounts for 50% of the demand for ARM-based data-center CPUs. Amazon has partnered with ARM on Graviton since 2018, and is now working on its fifth-generation ARM-based Graviton chip.

In April, Google announced it was developing a custom ARM-based CPU for AI workloads in its data centers. Named Axion, the CPU is already running Google’s internal AI workloads, such as optimizing its YouTube ads. The company plans to roll out the chips to its business customers later this year, and has said Axion will deliver 50% better performance than comparable x86 chips produced by Intel.

Microsoft is also using ARM-based Ampere Altra processors, designed by Ampere Computing, in its Azure cloud-computing platform. The software giant has reported a 50% increase in “price performance” (i.e., performance for comparably priced chips) relative to comparable x86 chips.

The bottom line: ARM’s energy-efficient RISC CPU architecture is rapidly gaining market share in today’s energy-intensive data centers. By delivering as much as 50% better performance (or price-performance) than the traditional x86 CPU architecture, ARM is eroding Intel’s former dominance over the data center. As a result, ARM’s share of data-center CPUs has more than tripled, from just 3% in 2020 to 10% this year, while Intel is losing share.

This trend is still in the early innings, and it’s expected to accelerate in the years ahead. Industry analysts expect ARM’s share of data-center CPUs to more than double to 22% next year, and to reach 50% by 2030.
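For readers who want to check the math, here is a quick Python sketch of the share figures above. The inputs are the percentages cited in the text; treating “this year” as 2024 is our assumption. On those numbers, climbing from 10% to 50% by 2030 implies ARM’s share compounding at roughly 31% a year.

```python
# Data-center CPU share figures cited in the text (fractions of the market).
# Treating "this year" as 2024 is an assumption.
SHARE_2020 = 0.03
SHARE_2024 = 0.10
SHARE_2025_FORECAST = 0.22
SHARE_2030_FORECAST = 0.50


def growth_multiple(start: float, end: float) -> float:
    """How many times larger `end` is than `start`."""
    return end / start


def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    print(f"2020 -> 2024: {growth_multiple(SHARE_2020, SHARE_2024):.2f}x")
    print(f"2024 -> 2025 forecast: {growth_multiple(SHARE_2024, SHARE_2025_FORECAST):.2f}x")
    cagr = implied_annual_growth(SHARE_2024, SHARE_2030_FORECAST, 2030 - 2024)
    print(f"Implied share growth, 2024 -> 2030: {cagr:.1%} per year")
```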

Finally, there’s another new source of demand for ARM-based chips in the burgeoning market for AI-enabled personal computers. Unlike earlier AI applications such as ChatGPT, which run through the cloud, AI PCs let users run AI applications directly on their own devices. Analysts estimate that AI-based PCs could make up 40% of global shipments as early as next year.

ARM is currently working with more than half a dozen companies to implement its RISC architecture in chip designs for AI-enabled personal computers. These include Nvidia, Microsoft, Qualcomm, Apple, Lenovo, Samsung, and Huawei.

AI PCs could be ARM’s path to a much larger presence in the PC market, given the advantages of its RISC-based architectures over the energy-hungry x86-based chips that currently dominate it.

ARM has already made significant inroads against the x86 architecture in PCs through its long-running partnership with Apple. In 2020, Apple announced it would begin transitioning its Mac lineup, including the MacBook Air, MacBook Pro, Mac mini, and iMac, away from Intel’s x86 processors to its own ARM-based M1 “Apple silicon” chips. The M1 chips (and subsequent iterations) have been a massive success for Apple, delivering substantial improvements in performance and battery life.

A Wonderful Business – But at a High Price

ARM generated $3.2 billion in revenue last year, up 21% year-on-year, from the 28.6 billion chips produced using ARM-based architectures. On the surface, that revenue may seem small relative to the number of units shipped: it works out to roughly 11 cents for each chip built on ARM’s architecture.
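The per-chip figure is a simple division. Note that the $3.2 billion total includes upfront licensing fees as well as royalties, so this is a blended average rather than the royalty ARM actually collects on any individual chip.

```python
# Figures from the text: last year's revenue and ARM-based chip shipments.
REVENUE_USD = 3.2e9
CHIPS_SHIPPED = 28.6e9

# Blended average: total revenue (licensing fees plus royalties) per chip shipped.
revenue_per_chip_usd = REVENUE_USD / CHIPS_SHIPPED
print(f"Average revenue per chip: {revenue_per_chip_usd * 100:.1f} cents")
```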

But the key to ARM’s business model is that it requires virtually no capital investment, because ARM doesn’t manufacture anything. It’s purely a technology company that creates the blueprints for chip architectures. It then licenses those blueprints to other companies, like Nvidia, which design their chips based on ARM’s architecture. ARM receives an upfront licensing fee, plus a small royalty on every chip sale in perpetuity.

The beauty of this business model is that ARM can create a blueprint once, and sell that same blueprint (or a slightly modified version) to multiple companies. And it collects a perpetual revenue stream for as long as the chip remains in production, which can be for decades. ARM today is earning royalty revenue on chip architectures it designed in the 1990s.
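To make the “blueprint once, license many times” model concrete, here is a toy Python model of a license-plus-royalty business. Every number in it (the upfront fee, the 10-cent royalty, the unit volumes, the number of licensees) is hypothetical and chosen only for illustration; these are not ARM’s actual contract terms. The point is structural: one design earns an upfront fee from each licensee plus a royalty stream that continues for as long as chips ship.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LicenseDeal:
    """One licensee's deal for a single design (all values hypothetical)."""
    upfront_fee: float          # one-time fee, paid in the first year
    royalty_per_chip: float     # paid on every chip the licensee ships
    annual_units: List[float]   # chips shipped in each year of production

    def revenue_by_year(self) -> List[float]:
        """Year 0 includes the upfront fee; every year earns royalties."""
        return [
            (self.upfront_fee if year == 0 else 0.0) + units * self.royalty_per_chip
            for year, units in enumerate(self.annual_units)
        ]


def total_revenue(deals: List[LicenseDeal]) -> float:
    """One design licensed to several customers: their revenues simply add up."""
    return sum(sum(deal.revenue_by_year()) for deal in deals)


if __name__ == "__main__":
    # Hypothetical: the same design licensed to three chipmakers, each paying a
    # $10 million fee and a 10-cent royalty on 100 million chips a year for 10 years.
    deals = [LicenseDeal(10e6, 0.10, [100e6] * 10) for _ in range(3)]
    print(f"Ten-year revenue from one design: ${total_revenue(deals) / 1e6:,.0f} million")
```

Under these made-up inputs, the three upfront fees ($30 million) are a small slice of the $330 million total; most of the revenue comes from royalties that keep arriving year after year.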

That’s the ultimate version of “mailbox money” – consistent incoming revenue that requires little additional operating expense or investment once a design is licensed, so much of it flows through to free cash flow. This makes ARM one of the most capital-efficient businesses in the world: the company spends less than $100 million in capex each year to produce over $3 billion in revenue. As a result, ARM generates roughly $1 billion in annual free cash flow, a stellar 31% free-cash-flow margin.
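A quick check of the capital-efficiency figures, using the rounded numbers from the text. Because ARM spends “less than $100 million” on capex, the capex ratio computed here is an upper bound.

```python
# Rounded annual figures from the text, in US dollars.
REVENUE_USD = 3.2e9
FREE_CASH_FLOW_USD = 1.0e9
CAPEX_CEILING_USD = 100e6  # "less than $100 million", so treat as an upper bound

fcf_margin = FREE_CASH_FLOW_USD / REVENUE_USD
max_capex_share = CAPEX_CEILING_USD / REVENUE_USD

print(f"Free-cash-flow margin: {fcf_margin:.0%}")                    # 31%
print(f"Capex as a share of revenue: under {max_capex_share:.0%}")   # 3%
```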

With booming demand for ARM’s data-center CPU designs, the company’s revenue is expected to grow 23% in each of the next two years, up from 21% growth last year. That puts its expected 2026 revenue at $4.9 billion. These cutting-edge CPU chip architectures command above-average margins, which are expected to propel ARM’s profit margins to a record-high 48% in 2026. As a result, ARM’s earnings per share are on track to reach $1.55 this year and $2 in 2026, up from $0.32 per share last year.
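Compounding the 23% growth estimate on the $3.2 billion base for two years gives about $4.84 billion, close to the $4.9 billion figure cited; the small gap presumably reflects rounding in the base-year revenue. The sketch below simply applies a constant growth rate and is not the analysts’ actual model.

```python
from typing import List


def project_revenue(base: float, growth_rate: float, years: int) -> List[float]:
    """Revenue for each of the next `years` years at a constant annual growth rate."""
    projections = []
    revenue = base
    for _ in range(years):
        revenue *= 1 + growth_rate
        projections.append(revenue)
    return projections


if __name__ == "__main__":
    # Base-year revenue and the 23% growth estimate, both from the text.
    for year_ahead, revenue in enumerate(project_revenue(3.2e9, 0.23, 2), start=1):
        print(f"Year {year_ahead}: ${revenue / 1e9:.2f} billion")
```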

Based on expected 2026 earnings of $2 per share, ARM’s current price of around $170 gives the shares a forward price-to-earnings multiple of 85x. Even with its rapid growth rate, enviable profit margins, and dominant industry position, ARM shares are too expensive for our liking at the moment.
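A forward P/E is just share price divided by expected earnings per share. The 85x multiple corresponds to the $2 estimate for 2026; on this year’s $1.55 estimate, the same $170 price works out to roughly 110x.

```python
def price_to_earnings(price: float, eps: float) -> float:
    """Price-to-earnings multiple: share price divided by earnings per share."""
    return price / eps


SHARE_PRICE = 170.0  # approximate current price cited in the text
EPS_ESTIMATES = {"this year": 1.55, "2026": 2.00}  # expected EPS from the text

for label, eps in EPS_ESTIMATES.items():
    print(f"P/E on {label} estimated EPS: {price_to_earnings(SHARE_PRICE, eps):.0f}x")
```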

ARM shares have been swept up in the speculative mania that has propelled virtually every AI-related company to new record highs in recent months. As Porter recently noted on X (formerly Twitter), the current market backdrop sets the stage for a 50% or greater decline in the tech-heavy Nasdaq from here.

So while there may still be near-term upside from the momentum-fueled mania, the downside risks outweigh the potential long-term returns at current prices. When the dust settles on this speculative frenzy, patient investors will be rewarded with more attractive entry points in ARM shares, along with our other parallel-computing recommendations. For now, we’re putting ARM on the watchlist, and we’ll continue monitoring the company’s developments in the meantime.

Stay tuned for future updates.
