Editor’s Note: On Tuesdays we turn the spotlight outside of Porter & Co. to bring you exclusive access to the research, the thinking, and the investment ideas of the analysts who Porter follows.

If you’re new to The Big Secret on Wall Street and want to learn more about this membership benefit, you can read all about it here or check out our previous Spotlights here.

Slaughtering sacred cows is dirty work.

And it’s also deeply unpopular work.

That’s because when you question someone’s beliefs, they often take it personally. They think that you’re attacking them… and not only just their thinking.

But I love it. In fact, I’ve made a career of getting bloody up to my elbows as I’ve culled sacred cows… like in 2002, when I warned that General Electric (GE), at the time the most valuable publicly traded company in the world, was doomed… when, four years later, I said that General Motors (GM) would go bankrupt…and in 2008, when I wrote that Fannie Mae (FNMA) and Freddie Mac (FMCC) would go to zero. And today… when I’m saying that American aerospace icon Boeing (BA) is going to go bust.

I’m mentioning this because you don’t find this willingness to stare down know-nothing critics in the name of speaking the truth very often. And it’s one of the things I like the most about my friend Jeff Brown.

Jeff isn’t only one of the best stock pickers I’ve ever worked with… for example, Jeff was recommending tech investments like Bitcoin in 2015 (up ~27,000%) and Nvidia in 2016 (up ~11,000%), among many others.

He’s also a tech visionary. He has an uncanny ability to discern trends well before they unfold… and become part of the conventional wisdom.

And maybe most importantly… Jeff, like me, relishes taking on those sacred cows before whom everyone else is genuflecting. He calls it like he sees it – critics clutching their pearls be damned.

And now… Jeff is doing it again. He’s forecasting an unprecedented crash in what’s been one of the market’s hottest sectors, artificial intelligence (“AI”), that will send many in-the-headlines AI stocks down by 50% or more… including one that he calls “AI’s Most Toxic Ticker” that, if it’s in your portfolio, you should sell at once.

That ticker is part of a special presentation Jeff has put together, and I’m excited to announce that we’re giving Porter & Co. subscribers a special sneak preview right here. That’s right… this hasn’t been released anywhere else yet, and it won’t be until tomorrow (Wednesday) night.

And there’s one stock that Jeff believes will be immune to the coming AI crash… which he’s written about below (and also here).

At least as importantly, in his presentation Jeff will be disclosing how you can use the upcoming crash in AI shares to profit, using a new proprietary platform that he’s developed. And Porter & Co. subscribers are getting access to a special pre-release price.

This “Bulletproof” AI Player Will Be Essential In The Data Center Buildout

The late 1990s marked a major turning point for the internet…

Global internet usage exploded from about 44 million users in 1995 to nearly 281 million by 1999. It was a massive surge – like nothing the world had ever seen before. And internet infrastructure wasn’t anything like what we have today. It wasn’t built to handle the flood of traffic.

So, we saw a rapid build-out of fiber optic cables connecting telecommunications hubs to data centers, which allowed all of us to access the internet.

And all that new traffic needed to be directed to the right places – whether it was to America Online, AltaVista search, eBay auctions, or Napster to download music.

The devices that handled this job were called routers. For nearly 40 years now, one company has dominated this space: Cisco Systems.

At the time of the internet surge, Cisco owned about 80% of the market share for various kinds of routers. But the industry was hungry for alternatives. They needed competition, not just to drive innovation but also to keep prices in check.

Enter Juniper Networks.

Its founder, Pradeep Sindhu, knew he was coming into the game late. The idea for Juniper didn’t take shape until 1996, well after the internet boom had started. Cisco – founded in 1984 – had a 12-year head start.

But Sindhu found his niche: high-end routers – the kind that telecom companies used as the backbone of the internet.

This was a genius move for two reasons.

First, by focusing on high-end routers, he could attract early-stage funding from big-money potential clients like Ericsson, Lucent Technologies, and Nortel Networks.

Second, the market for big internet protocol (IP) routers – the ones directing traffic for large communications companies – was growing at an astonishing 150% annual rate.

When a market grows that fast, there’s plenty of room for multiple companies to succeed.

Juniper didn’t need to knock Cisco off its perch. It just needed to show it could produce routers as good as, or better than, Cisco. The sales would follow.

The reality is that the market was expanding so rapidly and would keep expanding for many years to come… there was plenty of room for both players.

Juniper’s strategy worked right out of the gate. It grew from $3.8 million in revenue in 1998, when it launched its first product, to $887 million by 2001.

Investors in both companies made out like bandits during that time. Most of us have heard Cisco’s success story… It skyrocketed more than 100,000% from 1990 to its peak in April 2000. It was even the largest company by market cap for a short while.

Juniper investors did quite well too. Kleiner Perkins, the storied venture capital firm, was an early investor in Juniper… and reportedly saw a 230,000% gain on that investment. Public shareholders also profited – from Juniper’s IPO in June 1999 to its peak in October 2000, the stock gained 4,188%.

And here’s the kicker: Juniper never had to displace Cisco.

Its products became just as big of a part of the internet’s backbone as Cisco’s, and their products worked side by side routing bits of data to the right locations all over the world.

This is important to remember because, this month, we’re spotlighting another chipmaker that’s been essential to the artificial intelligence (AI) boom…

Advanced Micro Devices (AMD).

We recently talked about NVIDIA (NVDA) and how it has the dominant position in AI GPUs – specifically in the training of LLMs. It has an estimated 90% of the AI GPU market, while AMD has the rest.

But here’s the thing – NVIDIA’s GPUs are designed to work hand-in-hand with AMD CPUs. Neither company will say it out loud, but in many ways, they’re practically partners.

Companies that manufacture AI servers offer a powerful combination of AMD EPYC CPUs and NVIDIA H100 GPUs. This pairs what many consider the best CPU with the fastest GPU.

The AI data center market is growing fast enough for both NVIDIA and AMD to profit immensely… And just as Juniper made a name for itself in the rise of the internet, AMD is carving out a not-so-little niche for itself in the data center buildout.

The Brownstone Research Mission

Since my return to the helm at Brownstone Research, we’ve revisited the investment thesis for recommendations I made. I didn’t want to delay our investment in some of the best large-cap companies in the world.

In my first week back, we sent out investment alerts for Uber (UBER), NVIDIA, and AMD. And over the past couple of months, we’ve dug deeper into what exactly has been driving these stocks higher.

The main theme behind these investments is the advancements in artificial intelligence technology.

Now, some readers have written in asking why we’re putting such a strong focus on AI…

The answer is straightforward: AI is attracting more investment capital than any other trend. And it’s not just investors pouring money into AI. Companies are also positioning themselves to capitalize on the massive adoption of this technology.

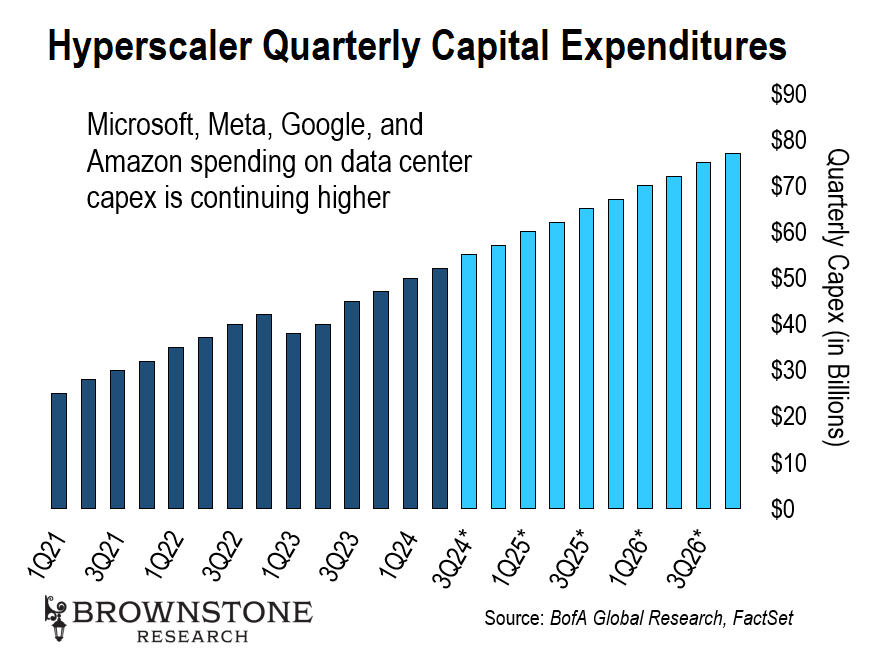

The total capital expenditures (capex) of just the big four hyperscalers – Microsoft (MSFT), Meta (META), Google (GOOG), and Amazon (AMZN) – exceed $50 billion each quarter. And as we can see from this chart, capex is still on the rise.

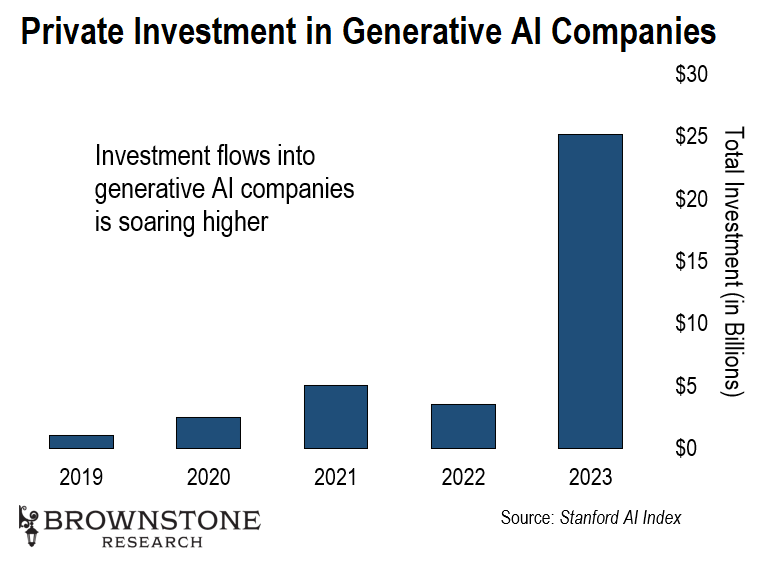

Investment flows are surging as well. Take a look at the investments made into private generative AI companies since 2019.

Not surprisingly, investments in artificial intelligence companies will be even higher in 2024. Q2 saw more than $27 billion invested into private AI companies alone. For the full year, I expect the total amount invested into private AI companies to be over $100 billion.

This includes over $7 billion raised by Anthropic and $6 billion raised by xAI, as well as another $6.6 billion raised in OpenAI’s most recent funding round in October.

These record levels of investments into private companies in the AI space bode well for public companies that are providing technology for the industry.

Many of the top-performing companies of the year are AI-related. NVIDIA, for example, is the top performer in the S&P 500 with a more than 190% gain, year to date.

Even energy companies like Vistra (VST), Constellation Energy (CEG), and NRG Energy (NRG) have seen a boost thanks to future AI data center power demands, landing them among the top 10 performers of the year.

As investors, we want to follow the money. And right now, it’s flowing into AI companies.

The only other investment trend that even comes close to the scale of AI is the surge in GLP-1 fat-loss drugs. These drugs aren’t just helping people lose weight – they’re also treating diabetes symptoms, aiding with addictions, and might even slow the progression of Alzheimer’s.

These are the hottest drugs on the market right now.

But the leader in this space, Eli Lilly (LLY), trades at a hefty 85x EV/EBITDA ratio. We need to see a pullback before we can safely initiate a position in this company.

Right now, it’s all eyes on the artificial intelligence boom…

AI Is Just Getting Started

I can’t emphasize enough how huge the GPU market could become – growing far beyond what many might expect.

The scale of the data centers being built right now is staggering.

And these footprints are only getting larger. Take Microsoft’s Project Stargate, for instance – a $100 billion AI data center slated for completion in 2028. That’s nearly three times what Microsoft spent on all its capital expenditures in 2023.

I recently visited Microsoft’s $1 billion supercomputer data center, currently under construction in Mt. Pleasant, Wisconsin.

When finished, it’s expected to span 1,030 acres – that’s the equivalent of 780 football fields or 1.6 square miles. Now, a $100 billion data center won’t have a footprint 100 times bigger, but even 10 times the footprint would be truly mind-blowing.

Looking ahead a few years, it’s not out of the question that we could see a $1 trillion data center built in the 2030s to fully harness the economic power of true artificial intelligence. This will likely be required to develop an artificial superintelligence (ASI).

The key point is that these massive AI-specific data centers are multibillion-dollar, multi-year projects. And they’re going to continue no matter what happens with the economy, interest rates, or the U.S. election.

Whatever we’re concerned about in the months and years ahead, investing in sectors where money keeps flowing regardless is a smart move.

Nothing short of a catastrophic event would delay this spending. The prize is just too big.

Artificial General Intelligence (AGI) Is the Ultimate Prize

In the short term, all the major hyperscalers are in a race to achieve artificial general intelligence (AGI).

AGI is the next monumental leap in artificial intelligence. Unlike the AI systems we’re used to today – those designed for specific tasks like image recognition or natural language processing – AGI is a form of intelligence that mirrors human cognitive abilities.

It’s an AI that can learn, reason, and apply its knowledge across a broad range of tasks, adapting to new situations just as a human would.

Today’s AI can excel at particular tasks like beating a world champion at chess or translating languages. AGI would have the capability to understand and perform any intellectual task that a human can. And it would do so with greater speed and accuracy.

But this isn’t just about processing more data. It’s about creating an AI that truly understands the world around it and can make decisions in real-time, in any context, without needing specific programming for each scenario.

AGI is the ultimate prize of AI research. A machine that can think, learn, and reason like a human, but with the ability to improve itself continuously. And AGI will be capable of performing research and development autonomously around the clock.

This kind of intelligence could revolutionize industries, solve complex global challenges, and transform the way we live and work.

It’s not going to be just one company that gets to AGI. It will be multiple companies with competing AGIs.

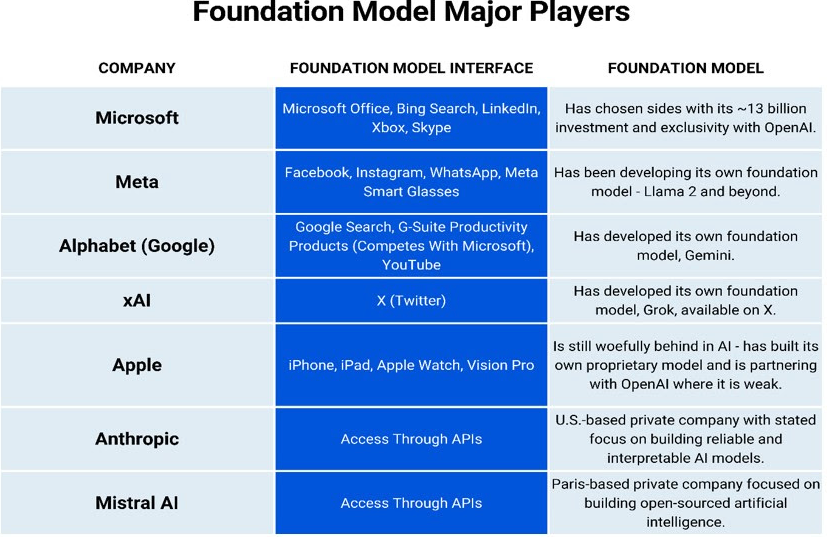

Currently, we have seven major players (ex-China) with foundational models. Five of them have well-established user interfaces – a technical term I use to describe their existing products that will act as immediate distribution for their AI technology.

The performance of these models is tracked in real-time and measured against industry standard benchmarks. We have yet to see a “perfect” model. Some are better than others at certain tasks. But I’m predicting these companies will all have their own AGI. They all have the capital resources, human resources, and technology to achieve AGI.

The economic benefits of running an AGI will be astronomical. This will justify increased spending on AI by every company in the world… Which will justify even more spending on AI data centers. This will be the largest productivity breakthrough in history.

The other thing for us to keep in mind is that some of the most advanced research is published in the open. So are open-source foundational models like Meta’s Llama 3.1 – which is performing almost on par with Anthropic’s most advanced model, Claude 3.5 Sonnet.

This allows anyone knowledgeable enough to utilize top-quality AI models on their own servers with their own hardware with their own private data.

Providing server equipment for companies running private instances of these large language models (LLMs) or smaller LLMs is already a multibillion-dollar industry.

It’s Not AMD vs. NVIDIA… It’s AMD and NVIDIA

Too often, financial pundits pit these two companies against each other. But that’s the wrong perspective. In reality, the offerings from AMD and NVIDIA often complement each other in significant ways.

The NVIDIA H100 is the gold standard for AI GPUs. But when it comes to server CPUs, AMD’s EPYC processors have set the benchmark.

Consider this: Nine of the world’s 30 fastest supercomputers pair AMD CPUs with NVIDIA GPUs. These two components were designed to work together seamlessly.

Many data centers purchase their servers from other companies – like Supermicro (SMCI), Dell (DELL), Hewlett Packard Enterprise (HPE), Lenovo, Asus, and Gigabyte. All these major server providers offer configurations that combine AMD CPUs with NVIDIA GPUs.

Neither company is standing still. NVIDIA has recently developed its Grace CPU – a breakthrough CPU superchip that is “designed to meet the performance and efficiency needs of today’s AI data centers.” And it is integrating these CPUs directly with its Hopper H100 GPUs in its Grace Hopper offering.

Once again, this isn’t a direct threat to AMD.

Both AMD and NVIDIA are likely to expand their share of the server CPU market. Right now, AMD controls 24.1% of that market, while NVIDIA’s share is next to nothing. The other 75% is held by Intel (INTC).

The fact that Intel still has that much of the CPU server market is quite astonishing. They have bungled every release of server CPUs in the past decade. It started with the two-year delay of their 14nm (nanometer) Broadwell processors in 2014. Every release since has been nearly two years delayed past their planned release date.

And Intel’s latest chips, the 4th-generation Xeon series called Sapphire Rapids, are built on a 10nm node. That’s two generations behind AMD’s latest Genoa EPYC CPU, which is built on 5nm technology.

Having a smaller node makes the semiconductor faster and more energy efficient. AMD’s EPYC outperforms Intel on most general-purpose workloads.

The reason Intel has been able to hold onto this market share is that switching is a painful process. Many enterprise applications and systems are optimized for Intel’s architecture simply because Intel was there first. And for non-time-sensitive applications, Intel’s CPUs still work fine.

But the future of data centers is changing. Here’s what NVIDIA CEO Jensen Huang said about it…

Every single data center… 100% of the trillion dollars’ worth of infrastructure… will be completely changed. And there will be new infrastructure built on top of that.

If data centers don’t upgrade their network and computing infrastructure, they won’t be able to handle the coming AI workload.

Since these companies will be rebuilding new data center infrastructure from the ground up, they’ll be rethinking their loyalty to the laggard, Intel. And AMD will continue to eat into Intel’s market share.

As I said above, the AMD EPYC CPUs have the best benchmark performance. NVIDIA’s Grace CPUs are comparable to Intel’s Xeon… But that’s just in the benchmarking test.

NVIDIA is likely to have optimized compatibility between its Grace CPU and its GPUs. That means in the real world, their CPU will outperform Intel’s as well.

AMD has been eating Intel’s lunch for years now. In 2017, it had only 0.8% of the server CPU market; now that number is approaching 25%. And NVIDIA is about to start carving out its own small slice of this market, though nowhere near AMD’s.

When it comes to the server compute market, competition is essential. Just like the industry welcomed Juniper’s competition to Cisco in the 1990s, today’s market wants more than one CPU and GPU vendor.

The industry needs and wants competition between at least two companies to keep prices in check and to drive forward the capabilities of GPUs. This year, both NVIDIA and AMD increased the cadence of their upgrade cycles from 18-24 months down to just 12 months. Now, each company will release a new GPU every year, pushing each other to new heights.

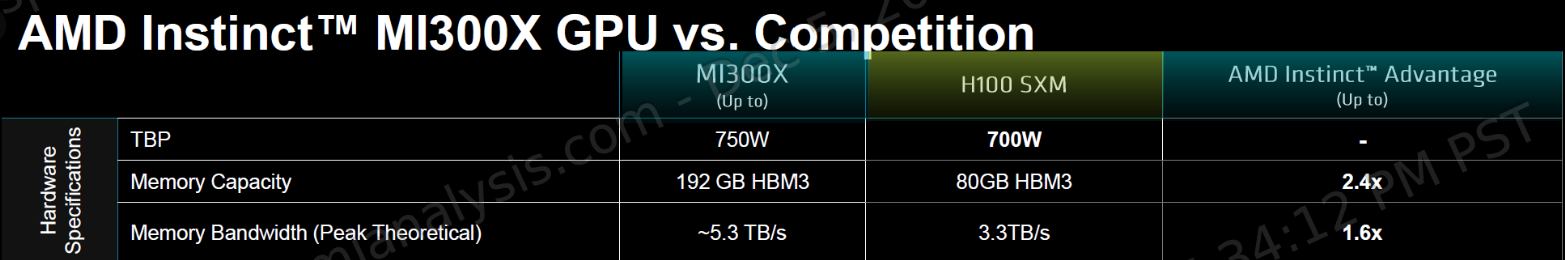

Currently, AMD’s flagship server GPU is the MI300X, their answer to NVIDIA’s H100. But AMD isn’t trying to overtake NVIDIA for training large language models (LLMs)…

Training vs Inferencing

Where AMD is already carving out a lead for itself is in inferencing.

When deploying a model like ChatGPT, OpenAI must first train it and then release the model to answer our questions.

The work that the AI model does to respond to our queries is called inference. We can think of inference as the model “thinking.” It makes predictions about what we want based on what was learned from training. A simpler way to think about inference is as the running of AI applications (as opposed to the training of an AI).

Since inferencing happens after training, AMD made a smart move focusing on this area. Especially when considering the fact they lag behind NVIDIA in training. Also, the growth in inference compute is expected to outpace training compute in the coming years. One firm predicts demand for inference compute will grow 90% annually through the rest of the decade.

The reason for that is twofold. First, as adoption increases it’ll require more compute to handle the increased requests. Second, these generative AI models are creating more complex outputs.

AMD has designed its latest MI300X GPU specifically to handle these inferencing tasks efficiently. The MI300X boasts 2.4 times the memory capacity and 1.6 times the memory bandwidth of NVIDIA’s H100…

Having more memory is crucial because it allows larger models to fit entirely in the memory of a single GPU. For instance, models like Mistral AI’s Mixtral 8x7B LLM, which is popular in production workflows, won’t fit on a single H100 GPU but can fit on one MI300X GPU.
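For readers who want to sanity-check that claim, here is a rough back-of-the-envelope sketch. The figures in it are assumptions brought in for illustration, not numbers from this write-up: roughly 46.7 billion parameters for Mixtral 8x7B, 16-bit weights (2 bytes per parameter), 80 GB of memory on an H100, and 192 GB on an MI300X. It counts model weights only and ignores the extra memory a live deployment needs for things like the KV cache.

```python
# Rough check of whether a model's weights fit in a single GPU's memory.
# ASSUMPTIONS (not from the article): ~46.7B parameters for Mixtral 8x7B,
# 2 bytes per parameter (FP16/BF16), H100 = 80 GB, MI300X = 192 GB.
# Weights only: KV cache and activations would need additional memory.

BYTES_PER_PARAM_FP16 = 2


def weights_gb(params_billions: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes)."""
    return params_billions * bytes_per_param


def fits(params_billions: float, gpu_memory_gb: float) -> bool:
    """True if the weights alone fit in the given GPU memory."""
    return weights_gb(params_billions) <= gpu_memory_gb


MIXTRAL_PARAMS_B = 46.7                  # assumed parameter count, in billions
GPUS = {"H100": 80, "MI300X": 192}       # assumed memory capacity, in GB

for name, memory_gb in GPUS.items():
    verdict = "fits" if fits(MIXTRAL_PARAMS_B, memory_gb) else "does not fit"
    print(f"{name}: needs ~{weights_gb(MIXTRAL_PARAMS_B):.1f} GB of {memory_gb} GB -> {verdict}")
```

Under those assumptions, the weights alone come to a bit over 93 GB, which is more than one H100 holds but well within a single MI300X. Running the model at lower precision would change the answer, so treat this as an estimate rather than a spec.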

This makes the MI300X far more efficient for inference tasks. That’s why AMD is seeing increased adoption from major players like Microsoft, Amazon, and Google. In fact, AMD noted that over “100 enterprises and AI customers are actively developing or deploying the MI300X.”

In Microsoft’s July earnings release, CEO Satya Nadella said:

I am really excited to share that we are the first cloud to deliver general availability of VMs (virtual machines) based on AMD’s MI300X AI accelerator. It’s a big milestone for both AMD and Microsoft. We’ve been working on it for a while, and it’s great to see that today, as we speak, it offers the best price-performance on GPT-4 inference.

Performance like this is what will drive AMD’s business forward and push its stock price higher. AMD CEO Lisa Su mentioned that the MI300X AI GPUs were the “fastest-ramping product in AMD history.” They sold over $1 billion worth of these chips in less than two quarters.

Now, this isn’t to say that AMD is lagging in training – it’s actually running at comparable speeds to the H100 on many benchmark tests.

And AMD powers the world’s fastest supercomputer. The Oak Ridge National Laboratory’s Frontier supercomputer uses AMD CPUs and GPUs to process data…

But AMD should brace for competition from NVIDIA. Estimates suggest that NVIDIA’s new Blackwell GPUs will show a 30x improvement in inference… something AMD will likely match with its MI350X release next year.

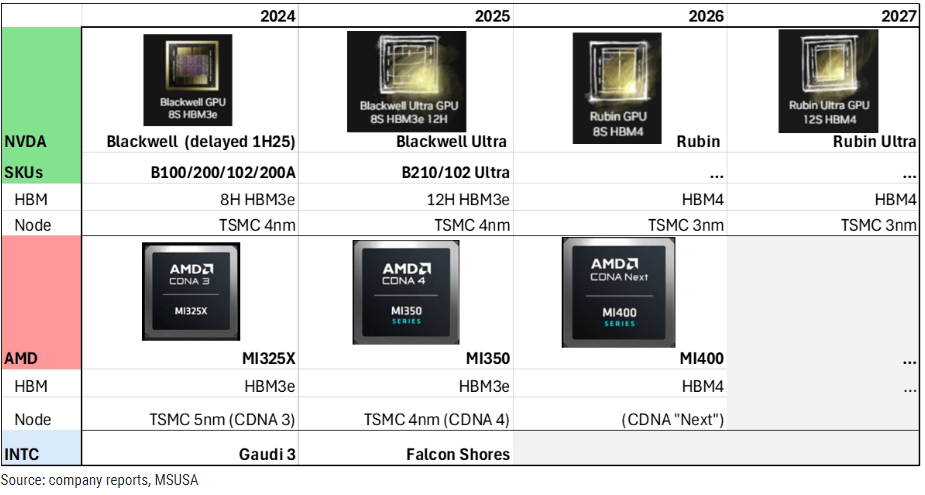

Here’s a chart of the product roadmap for both AMD and NVIDIA AI GPUs.

We should expect this technological leapfrogging to continue for years to come. And that’s going to be great for both companies as AI data centers scramble to equip themselves with the latest and greatest GPUs for training models and running inference.

AMD’s New $10 Billion Niche

GPUs are undoubtedly going to be a massive business for AMD in the years ahead. But they aren’t the only chips that handle inference tasks.

AMD is a leader in field-programmable gate array (FPGA) chips. Long-time readers may remember former portfolio holding Xilinx (XLNX). We owned Xilinx for its FPGA portfolio and held the shares when AMD announced its intention to buy the company.

Xilinx, now under AMD, controls about 50% of the FPGA market, making it the clear leader in this space. FPGAs are incredibly versatile chips. As the name suggests, these chips are programmable, allowing them to efficiently run a wide range of tasks.

This versatility will be crucial for non-time-sensitive tasks in data centers, like inference. But it also positions AMD as a major player in AI at the “edge” of networks. The “edge” is an industry term to describe when technology is working in devices out in the field, like AI technology embedded in our smartphones, autonomous vehicles, smart homes, robotics, and more.

The FPGA market alone is projected to grow at a rate of 16.4% annually, reaching $25.8 billion by 2029. If AMD can maintain its 50% market share, this implies that its embedded unit could grow from $3.6 billion this year to over $12 billion by 2029. That’s growth no one’s really predicting… but I believe it’s likely as AI compute moves out to the edge.
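The arithmetic behind that projection can be sketched in a few lines. This is a minimal illustration, assuming "this year" means 2024 (so a five-year window to 2029) and treating the embedded unit's revenue as if it tracked AMD's FPGA share one-for-one, which is a simplification. The function names are made up for this example.

```python
# Back-of-the-envelope check of the FPGA projection.
# ASSUMPTIONS: a 5-year window (2024 -> 2029) and embedded revenue that
# tracks AMD's share of the FPGA market one-for-one. Function names are
# hypothetical, chosen only for this illustration.


def implied_revenue(market_size_bn: float, market_share: float) -> float:
    """Revenue implied by holding a given share of a market (in $ billions)."""
    return market_size_bn * market_share


def implied_annual_growth(start_bn: float, end_bn: float, years: int) -> float:
    """Compound annual growth rate needed to get from start to end."""
    return (end_bn / start_bn) ** (1 / years) - 1


FPGA_MARKET_2029_BN = 25.8   # projected market size, from the text
AMD_SHARE = 0.50             # roughly 50% share, from the text
EMBEDDED_TODAY_BN = 3.6      # embedded unit revenue this year, from the text
YEARS = 5                    # assumed: 2024 through 2029

revenue_2029 = implied_revenue(FPGA_MARKET_2029_BN, AMD_SHARE)
growth = implied_annual_growth(EMBEDDED_TODAY_BN, revenue_2029, YEARS)

print(f"Implied 2029 revenue: ${revenue_2029:.1f} billion")
print(f"Implied annual growth for the embedded unit: {growth:.1%}")
```

Half of a $25.8 billion market is $12.9 billion, which is where the "over $12 billion" figure comes from. On these assumptions, the embedded unit would need to compound at a rate in the high 20s (percent per year), faster than the 16.4% projected for the FPGA market as a whole.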

Valuation

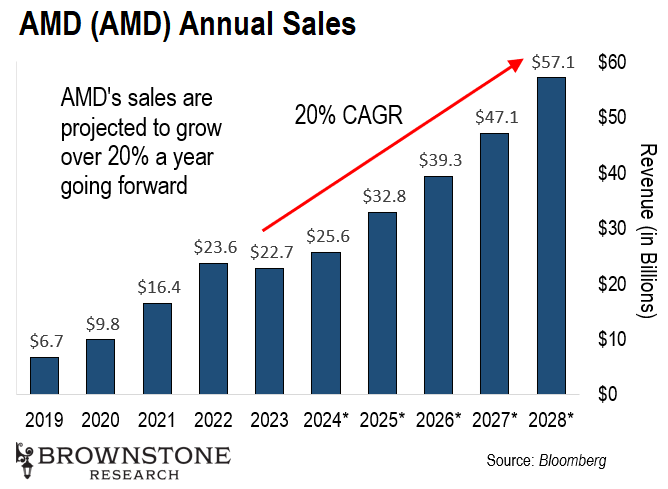

To get to our valuation, let’s start by looking at revenue growth projections. As the chart below shows, current projections are for AMD’s revenue to continue growing 20% a year through the end of the decade.

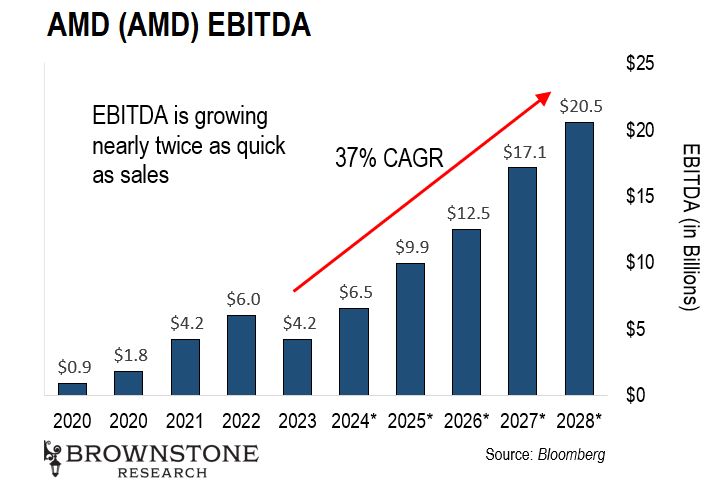

And even more impressive is the projected EBITDA growth over the same period, 37% a year.

This kind of growth allows us to pay a high multiple today but still make above-average returns. But AMD isn’t that expensive today when we look at its EV/EBITDA ratio.

It currently trades at 36.1x 2024 estimated EBITDA. That’s reasonable for a company projected to grow EBITDA by 37% annually. I’d even venture to say it’s undervalued.

But in the spirit of remaining conservative with our expectations, we’ll keep the valuation multiple the same going forward.

By the end of 2026, the consensus estimate for AMD’s EBITDA is $12.5 billion. Using a 36x multiple we get an enterprise value of $450 billion – about a 90% gain in just 16 months.
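Here is a minimal sketch of that valuation math for anyone who wants to plug in their own estimates. It assumes the roughly 90% gain is measured against today's enterprise value, which lets us back out the starting point the target implies. The function names are hypothetical, chosen for this example only.

```python
# Sketch of the multiple-based valuation.
# ASSUMPTION: the ~90% gain is measured on enterprise value, so the implied
# starting value is target / (1 + gain). Function names are hypothetical.


def enterprise_value(ebitda_bn: float, multiple: float) -> float:
    """Enterprise value implied by an EBITDA estimate and an EV/EBITDA multiple."""
    return ebitda_bn * multiple


def implied_starting_value(target_bn: float, gain: float) -> float:
    """Starting value implied by a target value and a fractional gain."""
    return target_bn / (1 + gain)


CONSENSUS_EBITDA_BN = 12.5   # consensus EBITDA estimate, from the text
MULTIPLE = 36                # EV/EBITDA multiple held constant, from the text
EXPECTED_GAIN = 0.90         # "about a 90% gain", from the text

target_ev = enterprise_value(CONSENSUS_EBITDA_BN, MULTIPLE)
starting_ev = implied_starting_value(target_ev, EXPECTED_GAIN)

print(f"Target enterprise value: ${target_ev:.0f} billion")
print(f"Implied starting enterprise value: ${starting_ev:.0f} billion")
```

The target works out to $450 billion, and a 90% gain to get there implies a starting enterprise value of a little under $240 billion. Swapping in a lower multiple or a more cautious EBITDA estimate shows how sensitive the upside is to each input.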

Action to Take: Buy AMD (AMD) up to a price of $180 a share.

Risk Management: At the time of publication, AMD (AMD) is not in the portfolio, so we will not provide regular updates unless we officially add it. If anyone purchases AMD, make sure to use a volatility-adjusted trailing stop.

The percentage stop may change as market conditions change. The current stop loss is 43% for anyone wishing to enter that into their risk management calculations. The exact dollar amount of everyone’s stop loss will vary depending on their entry price. I will rarely use a set dollar amount for a stop because, in the past, hedge funds have pushed prices down to our stop, forcing us to sell, so they could establish a position at a lower price.
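For anyone entering this into a spreadsheet or script, here is one simple way a percentage-based trailing stop could be tracked. This is a sketch under stated assumptions, not our exact method: it assumes the stop trails the highest closing price seen since entry, and the prices in the example are made up.

```python
# Minimal sketch of a percentage trailing stop.
# ASSUMPTIONS: the stop trails the highest closing price since entry, and
# the price series below is hypothetical. The 43% figure is from the text.
from typing import List, Optional, Tuple


def trailing_stop(closes: List[float], stop_pct: float = 0.43) -> Tuple[float, Optional[int]]:
    """Walk through closing prices, starting with the entry price.

    Returns (stop_level, index_of_first_close_at_or_below_stop).
    The index is None if the stop was never hit.
    """
    peak = closes[0]
    stop = peak * (1 - stop_pct)
    for i, price in enumerate(closes):
        peak = max(peak, price)          # the stop only ever ratchets upward
        stop = peak * (1 - stop_pct)
        if price <= stop:
            return stop, i
    return stop, None


# Hypothetical closes: enter at 150, run up to 200, then drop sharply.
stop_level, hit_at = trailing_stop([150.0, 180.0, 200.0, 110.0])
print(f"Stop level: {stop_level:.2f}, triggered at index: {hit_at}")
```

In this made-up series the peak is 200, so a 43% stop sits at about 114, and the final close of 110 triggers it. With a different entry price or a different run-up, the dollar level moves accordingly, which is why the stop is quoted as a percentage rather than a price.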

See a PDF of today’s Spotlight here.

Regards,

Jeff Brown

I’m thrilled that Jeff is making this kind of big call about a number of popular AI stocks… because I believe it will save his readers, including Porter & Co. subscribers, a lot of money. And Jeff’s system to generate profits in the upcoming AI crash is fantastic.